Introduction

Deploying cloud infrastructure and services should be as easy as committing to Git, but for most teams it’s a messy mix of YAML files, Terraform, and tribal knowledge. Here’s how we unified it. This blog post is based on my recent talk at DevOpsCon London, where I discussed how to manage and ship infrastructure and services more efficiently within a single Git flow. The goal is to tackle common pain points in modern DevOps practices by unifying infrastructure and service definitions and automating their deployment.

Key Issues Organizations Encounter

In many organizations, especially as they scale, several challenges emerge in managing infrastructure and services:

Disjointed Infrastructure Declaration: Each project often has its own way of declaring service infrastructure. This leads to inconsistencies, potential security vulnerabilities due to deviations from best practices, and the over-provisioning of resources. You might have seen new services struggling to deploy because another service has monopolized CPU resources.

Laborious and Unversioned Change Management: Changes to service infrastructure are typically made by editing YAML files copied into each repository, so a system-wide change means touching every repository and is difficult to manage and track effectively in Git.

Language Mismatch for Cloud Infrastructure: Defining service infrastructure often involves Go templating for Helm, while cloud infrastructure typically requires different languages like HCL for Terraform. This means teams need to be proficient in multiple declarative languages.

Separation of Cloud Infrastructure and Services: The management of cloud infrastructure is often completely separate from service changes. This makes it difficult to link new features directly to their required infrastructure, leading to deployment errors and difficulties in tracking changes.

Multiple Deployment Workflows: Deploying infrastructure (e.g., Terraform plans) and services (e.g., Helm upgrades) typically requires different, separate workflows. This increases management overhead and introduces more points of failure.

Ambiguous Roles and Responsibilities: In larger organizations with distinct roles for developers, platform engineers, and DevOps teams, it becomes unclear who owns what. A feature requiring both service changes and new infrastructure (such as an RDS instance) might necessitate changes in a central “platform-infra” repository, which can be contentious for platform engineers, as it impacts the entire organization.

Our Approach to Streamline DevOps

To address these challenges, we propose a multi-faceted approach involving Helm, Crossplane, ArgoCD, and the concept of “Composition.”

1. Service Infrastructure De-duplication through Modular Helm Charts

The first step is to tackle the problem of redundant service infrastructure declarations and lack of version control.

The Solution: We modularize Helm charts by moving service templates from individual service repositories into a central “platform-infra” repository. This “platform-infra” repository acts as a central place where common service configurations are defined as Helm charts.

Subcharts in Helm: Rather than duplicating YAML values in each service, these central Helm charts are used as subcharts. A service’s Chart.yaml declares a dependency on the subchart and pins its version. Values are passed to the subchart under its name, or under an alias when the chart is renamed or included more than once.

Packaging Helm Charts as OCI Images: Since Helm chart dependencies can’t point at Git URLs, we package each chart into a .tgz archive and push it to an OCI-compatible container registry such as ECR, where it is stored as an OCI artifact.

The `helm package .` command creates this .tgz file. When it is pushed to ECR, the chart version defined in Chart.yaml automatically becomes the image tag. The ECR repository is structured with a namespace (e.g., unified-management/microservices) and the chart name as the repository name.
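As a sketch of the consuming side, assuming a hypothetical service chart called orders-service, a placeholder AWS account ID (123456789012) and the eu-west-2 region, the service’s Chart.yaml can declare the central micro-service chart as an OCI dependency and pass it values under an alias. The alias `api` and the value keys (`image`, `replicaCount`) are illustrative; the real keys are whatever the central chart’s templates read.

```yaml
# infra/charts/Chart.yaml in the service repo (names and IDs are hypothetical)
apiVersion: v2
name: orders-service
version: 0.1.0
dependencies:
  - name: micro-service            # chart name = ECR repository name
    version: 1.2.0                 # chart version = image tag in ECR
    # Registry path up to the namespace; Helm appends the chart name
    repository: oci://123456789012.dkr.ecr.eu-west-2.amazonaws.com/unified-management/microservices
    alias: api                     # this instance's values live under "api:"
---
# infra/charts/values-dev.yaml (keys depend on the central chart's templates)
api:
  image:
    repository: 123456789012.dkr.ecr.eu-west-2.amazonaws.com/orders-service
    tag: "1.4.0"
  replicaCount: 2
```

After a `helm registry login` against the registry, running `helm dependency update` in infra/charts pulls the pinned chart and records it in Chart.lock.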

The Benefits:

Centralized and Versioned: Service infrastructure changes are now in one place and versioned.

Reduced Redefinition: Any repository needing a service configuration can simply use the modularized Helm chart as a subchart, eliminating redefinition.

Enforced Standards: System-wide changes, like enforcing CPU limit ranges, can be done in one place in the Helm chart, and a version upgrade will enforce it across all consuming repositories.
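One way to express a CPU limit range centrally is a Kubernetes LimitRange shipped as a template inside the micro-service chart. This is a minimal sketch, assuming each service is installed into its own namespace; the file name and CPU figures are hypothetical.

```yaml
# platform-infra/helm-charts/micro-service/templates/limitrange.yaml (hypothetical)
# Assumes one namespace per service, so this LimitRange bounds only that service.
apiVersion: v1
kind: LimitRange
metadata:
  name: {{ .Release.Name }}-cpu-limits
  namespace: {{ .Release.Namespace }}
spec:
  limits:
    - type: Container
      max:
        cpu: "2"          # no container may set a CPU limit above 2 cores
      default:
        cpu: 500m         # limit applied when a container declares none
      defaultRequest:
        cpu: 250m         # request applied when a container declares none
```

Tightening the ceiling later means editing this one template, bumping the chart version, and letting services pick up the new version.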

2. Bridging Infrastructure and Service Definitions with Crossplane

Next, we address the challenge of managing cloud infrastructure in a different language and disjointed from service changes.

Introducing Crossplane: Crossplane is a project of the Cloud Native Computing Foundation (CNCF, the open-source foundation that also fosters and maintains key cloud-native technologies like Kubernetes, Prometheus, and Envoy) that extends Kubernetes to manage external cloud resources. It provides a control plane that can manage resources across various environments (like AWS, Azure, or even a Domino’s Pizza API, if it has one!) via their APIs.

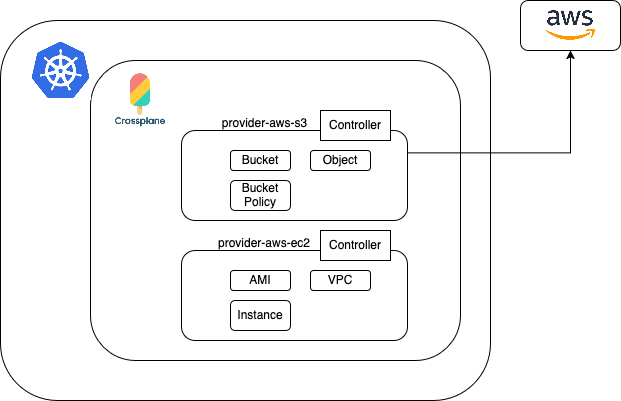

How Crossplane Works: It builds on Kubernetes’ control plane, controllers, and reconciliation loop. Crossplane uses “providers” (e.g., provider-aws-s3, provider-aws-ec2), and each provider manages only a subset of a cloud’s resources, so a cluster installs just the resource types it needs, which keeps the control plane scalable.
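For example, installing only the S3 provider plus a default AWS credential source could look like the sketch below, following the pattern of the Crossplane AWS quickstart. The package version is left as a placeholder, and the Secret name `aws-secret` with key `creds` is an assumption about where credentials are stored; the AWS family provider that serves ProviderConfig is pulled in as a dependency of provider-aws-s3.

```yaml
# Install only the S3 family provider (pin a version you have tested)
apiVersion: pkg.crossplane.io/v1
kind: Provider
metadata:
  name: provider-aws-s3
spec:
  package: xpkg.upbound.io/upbound/provider-aws-s3:<version>
---
# Credentials used by every AWS managed resource that references "default"
apiVersion: aws.upbound.io/v1beta1
kind: ProviderConfig
metadata:
  name: default
spec:
  credentials:
    source: Secret
    secretRef:
      namespace: crossplane-system
      name: aws-secret    # hypothetical Secret holding an AWS credentials file
      key: creds
```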

YAML for Infrastructure: The key advantage is that Crossplane resources are ordinary Kubernetes manifests written in YAML. This eliminates the need for Terraform’s HCL, allowing infrastructure to be defined in the same language as services. Upbound, a company that created many Crossplane providers, generates them from existing Terraform providers with its Upjet code generator, so resource fields closely mirror Terraform arguments and, if you know Terraform, you can apply that knowledge in Crossplane.
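To illustrate how Terraform knowledge carries over, here is a hypothetical bucket written as a Crossplane managed resource, with the equivalent Terraform resource in comments. Fields are the Terraform arguments in camelCase, and the region moves from the Terraform provider block onto the resource itself. The bucket name and tags are illustrative, and the API version (v1beta1 here) depends on the provider release installed.

```yaml
# Terraform equivalent, for comparison:
#   resource "aws_s3_bucket" "uploads" {
#     bucket        = "orders-uploads-dev"
#     force_destroy = true
#     tags          = { team = "orders" }
#   }
apiVersion: s3.aws.upbound.io/v1beta1
kind: Bucket
metadata:
  name: orders-uploads-dev      # used as the AWS bucket name by default
spec:
  forProvider:
    region: eu-west-2           # set per resource, not in a provider block
    forceDestroy: true          # force_destroy -> forceDestroy
    tags:
      team: orders
  providerConfigRef:
    name: default
```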

Unifying Definitions

Infrastructure YAML files (e.g., for ECR, RDS, S3) are stored alongside service definitions, typically in a cloud-infra folder.

Helm Templating for Infrastructure: We write our infrastructure definitions as Helm templates, so the same constructs (range loops, if conditions, shared values) apply to both infrastructure and service definitions. This allows infrastructure to be generated dynamically from values.
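A minimal sketch of such a template, assuming a hypothetical values layout with a top-level `region` and a list of `buckets`: `range` emits one Bucket per entry, and `if` adds a BucketVersioning resource only where it is requested. Because S3 bucket names are global, each entry is prefixed with the release name; all names here are illustrative.

```yaml
# infra/charts/templates/s3.yaml (hypothetical)
# Expects values such as:
#   region: eu-west-2
#   buckets:
#     - name: uploads
#       versioning: true
#     - name: exports
#       versioning: false
{{- range .Values.buckets }}
---
apiVersion: s3.aws.upbound.io/v1beta1
kind: Bucket
metadata:
  name: {{ $.Release.Name }}-{{ .name }}
spec:
  forProvider:
    region: {{ $.Values.region }}
{{- if .versioning }}
---
apiVersion: s3.aws.upbound.io/v1beta1
kind: BucketVersioning
metadata:
  name: {{ $.Release.Name }}-{{ .name }}
spec:
  forProvider:
    region: {{ $.Values.region }}
    bucketRef:
      name: {{ $.Release.Name }}-{{ .name }}   # the Bucket rendered above
    versioningConfiguration:
      - status: Enabled
{{- end }}
{{- end }}
```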

Why It Matters

Single Language: Cloud and service infrastructure are now declared in the same YAML language, leveraging the same Helm templating capabilities.

Single Unit of Deployment: You can deploy both the service and its required infrastructure in a single workflow (one apply of the chart, rather than a Terraform run followed by a Helm upgrade). This means that when a new feature requires an S3 bucket, that S3 definition is part of the same Pull Request (PR) as the application code, making dependencies clear and traceable.

3. Unified Management with ArgoCD

To automate the unified deployment workflow, we integrate ArgoCD.

ArgoCD’s Role: ArgoCD is a GitOps Continuous Delivery (CD) tool for Kubernetes that automates deployments.

It monitors GitHub repositories for changes.

It applies these changes to target Kubernetes clusters.

ArgoCD runs an internal reconciliation loop that continuously checks whether the cluster’s live state matches the desired state defined in Git. When they differ, it marks the application as out of sync and, if automated sync is enabled, applies the changes.
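A sketch of an Argo CD Application that tracks a service repository’s infra/charts chart with values-dev.yaml and syncs automatically. The repository URL, application name and target namespace are hypothetical.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: orders-service-dev
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/example-org/orders-service.git
    targetRevision: main
    path: infra/charts            # chart with the service and Crossplane templates
    helm:
      valueFiles:
        - values-dev.yaml
  destination:
    server: https://kubernetes.default.svc
    namespace: orders
  syncPolicy:
    automated:
      prune: true                 # delete resources removed from Git
      selfHeal: true              # revert manual drift in the cluster
    syncOptions:
      - CreateNamespace=true
```

With pruning enabled, deleting a Crossplane manifest from Git deletes the managed resource and, under Crossplane’s default Delete deletion policy, the real cloud resource as well, so it is worth deciding deliberately which applications get pruning.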

Integration

ArgoCD eliminates the need for manual `helm upgrade` workflows.

It can automatically pull Helm charts from ECR repositories (including the OCI-packaged charts we discussed earlier).

Crucially, ArgoCD works seamlessly with Crossplane: it applies the Crossplane manifests to the cluster like any other Kubernetes resource, and Crossplane’s providers then create the corresponding resources in AWS (or other cloud providers).
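For Argo CD to fetch OCI charts from ECR, it needs registry credentials. One option is a declarative repository Secret like the sketch below; the account ID, region and path are hypothetical and mirror the dependency repository used by the service charts. ECR authorization tokens expire after 12 hours, so the password has to be refreshed by some external mechanism (not shown).

```yaml
# Argo CD repository credentials for Helm charts stored in ECR (hypothetical values)
apiVersion: v1
kind: Secret
metadata:
  name: ecr-helm-oci
  namespace: argocd
  labels:
    argocd.argoproj.io/secret-type: repository
stringData:
  name: ecr-helm-oci
  type: helm
  # Registry path without the oci:// prefix; enableOCI marks it as an OCI registry
  url: 123456789012.dkr.ecr.eu-west-2.amazonaws.com/unified-management/microservices
  enableOCI: "true"
  username: AWS
  password: <output of aws ecr get-login-password>   # expires after 12 hours
```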

4. Packaging Crossplane Modules (Enforcing Best Practices)

Even with Crossplane, there’s a risk of developers defining resources in a non-compliant or insecure way (e.g., creating public S3 buckets without versioning). To combat this, we apply the same modularization concept used for Helm charts to Crossplane infrastructure definitions.

The Solution: We move common Crossplane infrastructure definitions (such as standard S3 buckets or RDS instances) into the central “platform-infra” repository.

Infrastructure as Subcharts: Similar to service Helm charts, these infrastructure definitions become subcharts. When a developer needs an S3 bucket, they use this pre-defined, centrally managed subchart and provide specific values.

Version Control for Infrastructure: Any required optimizations or changes (e.g., enforcing public access blocks or enabling the ignore-public-ACLs setting) are made in this central definition. A new version is pushed, and services simply update their dependency version, so every consuming service inherits the updated, compliant configuration on its next upgrade. Developers now submit infra changes via PRs, just like app code.
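A minimal sketch of the central S3 module, assuming a hypothetical template at platform-infra/helm-charts/s3/templates/bucket.yaml: consumers supply only `name` and `region`, while the public access settings are hard-coded so a consuming service cannot switch them off. Adding a setting such as `ignorePublicAcls` then only requires editing this template and publishing a new chart version.

```yaml
# platform-infra/helm-charts/s3/templates/bucket.yaml (hypothetical)
apiVersion: s3.aws.upbound.io/v1beta1
kind: Bucket
metadata:
  name: {{ required "name is required" .Values.name }}
spec:
  forProvider:
    region: {{ required "region is required" .Values.region }}
---
# Not driven by values: every bucket built from this chart gets these settings
apiVersion: s3.aws.upbound.io/v1beta1
kind: BucketPublicAccessBlock
metadata:
  name: {{ .Values.name }}
spec:
  forProvider:
    region: {{ .Values.region }}
    bucketRef:
      name: {{ .Values.name }}    # the Bucket declared above
    blockPublicAcls: true
    blockPublicPolicy: true
    ignorePublicAcls: true
    restrictPublicBuckets: true
```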

Impact:

Modularized Infrastructure: Infrastructure definitions are modularized and reside in a single source.

Enforced Best Practices and Compliance: This approach reduces repetition, enforces best practices, and makes maintenance much easier. Compliance problems can be addressed in one place.

Reduced Developer Intervention: System-level changes become simple version upgrades for developers, reducing the need for direct intervention.

5. Composition for Crossplane (Advanced Enforcement & Abstraction)

For even stricter enforcement and greater abstraction, Crossplane Composition offers a powerful solution. This is often what platform engineers will be most familiar with.

What is Composition?: Composition lets you define your own custom resource type in Kubernetes (declared through a CompositeResourceDefinition, or XRD, from which Crossplane generates the CRD) that Crossplane translates into multiple underlying resources.
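A minimal sketch of such a definition, assuming the Crossplane v1 XRD API with claims and a hypothetical platform-infra.com group: it declares a cluster-scoped XBucket composite and a namespaced Bucket claim, and exposes only a service name, a region, and tags.

```yaml
# platform-infra/crds/s3/bucket-crd.yaml (hypothetical contents)
apiVersion: apiextensions.crossplane.io/v1
kind: CompositeResourceDefinition
metadata:
  name: xbuckets.platform-infra.com   # must be <plural>.<group>
spec:
  group: platform-infra.com
  names:
    kind: XBucket
    plural: xbuckets
  claimNames:                         # namespaced type developers create
    kind: Bucket
    plural: buckets
  versions:
    - name: v1
      served: true
      referenceable: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                serviceName:
                  type: string
                region:
                  type: string
                tags:
                  type: object
                  additionalProperties:
                    type: string
              required:
                - serviceName
                - region
```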

Instead of developers directly defining an `s3.aws.upbound.io/v1beta2` resource from the official documentation, you create your own custom resource (e.g., `bucket.platform-infra.com/v1`). A composition.yaml then specifies that when someone creates a `bucket.platform-infra.com/v1` resource, it will automatically create an Upbound S3 bucket, enforce public access blocks, define bucket ACLs, and so on.

Developers then use a simplified YAML for a “bucket claim,” providing only essential details like service name, region, and tags, without needing to know the underlying cloud-specific details or Upbound resources.
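The matching Composition could look like the sketch below. It assumes XBucket/Bucket types in the platform-infra.com group (version v1) with `spec.serviceName`, `spec.region` and `spec.tags`, and that the function-patch-and-transform composition function is installed in the cluster. Each claim becomes an Upbound Bucket plus a BucketPublicAccessBlock whose settings developers cannot change; ACL resources are left out for brevity. A developer’s claim follows the `---`.

```yaml
# platform-infra/crds/s3/bucket-composition.yaml (hypothetical contents)
apiVersion: apiextensions.crossplane.io/v1
kind: Composition
metadata:
  name: xbuckets-aws
spec:
  compositeTypeRef:
    apiVersion: platform-infra.com/v1
    kind: XBucket
  mode: Pipeline
  pipeline:
    - step: patch-and-transform
      functionRef:
        name: function-patch-and-transform
      input:
        apiVersion: pt.fn.crossplane.io/v1beta1
        kind: Resources
        resources:
          # The S3 bucket itself; region and tags come from the claim
          - name: bucket
            base:
              apiVersion: s3.aws.upbound.io/v1beta1
              kind: Bucket
            patches:
              - type: FromCompositeFieldPath
                fromFieldPath: spec.region
                toFieldPath: spec.forProvider.region
              - type: FromCompositeFieldPath
                fromFieldPath: spec.tags
                toFieldPath: spec.forProvider.tags
              - type: FromCompositeFieldPath
                fromFieldPath: spec.serviceName
                toFieldPath: spec.forProvider.tags.service
          # Enforced for every claim; none of these fields are in the XRD schema
          - name: public-access-block
            base:
              apiVersion: s3.aws.upbound.io/v1beta1
              kind: BucketPublicAccessBlock
              spec:
                forProvider:
                  bucketSelector:
                    matchControllerRef: true   # the Bucket owned by the same composite
                  blockPublicAcls: true
                  blockPublicPolicy: true
                  ignorePublicAcls: true
                  restrictPublicBuckets: true
            patches:
              - type: FromCompositeFieldPath
                fromFieldPath: spec.region
                toFieldPath: spec.forProvider.region
---
# A developer's claim (namespaced); values are illustrative
apiVersion: platform-infra.com/v1
kind: Bucket
metadata:
  name: orders-uploads
  namespace: orders
spec:
  serviceName: orders
  region: eu-west-2
  tags:
    team: orders
```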

What This Unlocks:

Stronger Best Practice Enforcement: When an updated Composition is applied to the cluster, all existing and new claims inherit the defined best practices and improvements (Crossplane’s default composition update policy is Automatic). This means platform engineers can roll out improvements without asking developers to make changes.

Reduced Registry Dependency: XRDs and Compositions are plain manifests that can be applied straight from the platform-infra repository, so simpler compositions may not need a container registry to roll out changes.

Clear Boundaries and Empowerment: This approach clearly defines roles:

Developers: Use pre-defined modules from the platform team. They can contribute to the “platform-infra” infrastructure module definitions (with approval). The application code and infrastructure code are now part of the same PR, making it clear where a feature’s infrastructure comes from.

Platform Engineering Team: Responsible for creating and managing the Kubernetes cluster, maintaining and approving infrastructure and service modules, and enforcing best practices within these modules. This gives the platform team control while empowering developers to contribute features more easily.

In the end, the project structures look like this:

    service repo
    ├── infra/                        # Infrastructure configuration (Helm-based)
    │   └── charts/
    │       ├── templates/
    │       │   ├── ecr.yaml          # Template for custom ECR definition via Crossplane
    │       │   └── s3.yaml           # Template for custom S3 bucket definition
    │       ├── .helmignore
    │       ├── Chart.yaml            # Helm chart metadata with micro-service subchart as dependency
    │       ├── Chart.lock            # Helm chart dependency lock file
    │       ├── values-dev.yaml       # Environment-specific values (dev)
    │       └── values-prod.yaml      # Environment-specific values (prod)
    └── src/                          # Application source code

    platform-infra (central repo)
    ├── .github/                      # GitHub workflows, PR templates, etc.
    ├── cluster/                      # Kubernetes cluster-level configs
    ├── crds/                         # Custom Resource Definitions for Crossplane
    │   └── s3/
    │       ├── bucket-composition.yaml   # Crossplane Composition for S3
    │       └── bucket-crd.yaml           # Custom Resource Definition for S3
    ├── examples/                     # Example usage configs for consumers
    └── helm-charts/                  # Centralized Helm chart modules
        ├── micro-service/
        │   ├── templates/            # Helm templates for microservices
        │   ├── .helmignore
        │   └── Chart.yaml
        ├── rds/                      # Helm chart module for RDS
        └── s3/                       # Helm chart module for S3

This holistic approach allows organizations to achieve a truly streamlined DevOps process by bringing infrastructure and service management into a unified, version-controlled, and automated flow. It clarifies responsibilities, enforces best practices, and ultimately makes deploying new features faster and more reliable.